Facebook has, for the first time, published its full community guidelines as it attempts to rebuild confidence among its 2.2 billion users. The policy covers what can and cannot be published on the platform and aims to distance Facebook from fake news, hate speech and opioid sales.

Moderation by AI

It is understood that although Facebook has always had community guidelines, its internal moderation team (believed to number 7,500 people) was confused about what should be allowed. During his recent appearance before Congress, Facebook CEO Mark Zuckerberg said AI, rather than human moderators, was the best way to deal with inappropriate content. The internal team is thought to focus on content that users have flagged.

While there is some room for machine-assisted moderation, Facebook cannot rely purely on AI. AI's biggest weakness is its inability to understand the context of a conversation or to spot when people are being sarcastic. To date, natural language processing (NLP) has struggled to interpret language, context and reasoning together. When users invent their own codes to discuss inappropriate topics without being detected, the technology will take time to catch up. A human moderator, who understands the context, is likely to spot this quickly.

The Nitty Gritty: Facebook’s Community Standards

Facebook’s community standards released on 24 April are based on the following principles:

- Safety

- Voice

- Equity

Publishing the standards, the networking giant said: “Everyone on Facebook plays a part in keeping the platform safe and respectable. We ask people to share responsibly and to let us know when they see something that may breach our Community Standards.”

The full standards are published on Facebook’s website. They are divided into six sections: violence and criminal behaviour, safety, objectionable content, integrity and authenticity, respecting intellectual property, and content-related requests.

Each section clearly lists what is blocked. In the first section, violence and criminal behaviour, content relating to terrorist activity, organised hate, mass or serial murder, human trafficking, organised violence or criminal activity, and regulated goods will all be blocked. Under safety, child nudity and the sexual exploitation of children will be blocked, even when such content is shared with good intentions. The standards also address false news: satire is allowed, and while false news won’t be removed, Facebook will significantly reduce its distribution in the News Feed.

Under content-related requests, Facebook will remove a user’s own account at their request, and will remove the account of a deceased or incapacitated user, provided the request comes from an authorised representative. It will also remove any account whose owner is under 13 years old.

To enforce the standards, Facebook warned that users who repeatedly breach them will be banned and that, in some cases, it will contact the police if there is a genuine risk of physical harm or a direct threat to public safety.

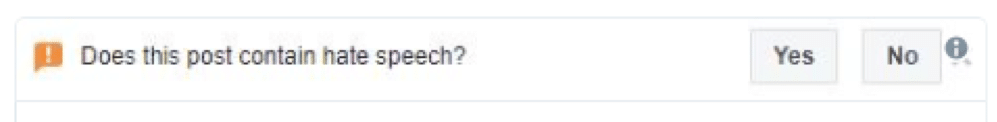

The release of the standards was followed by Facebook adding a hate button on any content published, although this has only been rolled out to US users. On 1 May, users saw the following under some of their posts:

It does appear that Facebook is taking its position as a respectable news carrier seriously, as it should. It remains to be seen whether these standards will be enough to clean up the platform. Facebook has acted swiftly to eradicate inappropriate content, as it cannot afford to be embroiled in another scandal.

Brands and Community Guidelines

Any conscientious brand should have a policy on how it expects its users to behave, whether on its website, social platforms or online community, on the telephone or face to face.

In fact, The Social Element works with each of its clients to draw up a 32-page moderation guidelines “bible” setting out how our moderation team should deal with inappropriate content. Our guidelines have more than 20 sections, covering topics such as libel, illegal activities, impersonation, distressing content, sexual content and privacy, each with clear instructions on exactly what to do.

Ambiguity will always stump a machine, and we know how complicated the English language can be. The future of moderation is machine-assisted technology led by humans. No system will be 100% foolproof, but the way forward is to have a machine do the heavy lifting by removing easily identifiable inappropriate content, leaving human moderators to handle the more complex cases. Regulated industries have no choice between human and machine-assisted moderation: all moderation must be performed by a human, or the company risks being fined by industry regulators.

This kind of stringent process has to be in place to ensure a safe environment, so users know what to expect and how to behave respectfully when interacting with others. It also allows the moderation team to make consistent decisions when enforcing the guidelines. Good moderation guidelines leave no grey areas when dealing with content; they need to be black and white. Either content is acceptable or it isn’t.

![Lisa Barnett](https://thesocialelement.agency/wp-content/uploads/2018/04/Lisa-Barnett.jpeg)

Lisa Barnett is Innovation & Content Director at The Social Element, which offers social media services for strategy, content and insights. She has more than 18 years’ experience running and managing social networks, as well as B2B and B2C communities. Before that, she worked in IT journalism for more than 10 years.